Posts: 244

Threads: 23

Joined: 2013-02-20

Another idea to save traffic/overhead and reduce server load:

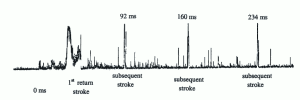

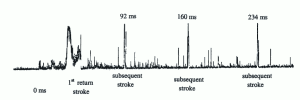

A lot of strikes are followed by further strikes within milliseconds.

Maybe you could pool multiple signals together and send data only, for example, once every second.
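A minimal sketch of the pooling idea, assuming each signal is available as a `(timestamp in seconds, payload bytes)` pair. The function name `pool_signals` and the one-second window are hypothetical illustrations, not part of the Blitzortung firmware or protocol. It groups signals into batches, and each batch would go out as one packet instead of one packet per signal.

```python
def pool_signals(signals, window_s=1.0):
    """Group (timestamp_s, payload) pairs into batches that each span less than window_s.

    Hypothetical sketch: each batch would be sent as one packet.
    """
    batches = []
    current = []
    window_start = None
    for t, payload in sorted(signals, key=lambda s: s[0]):
        # Start a new batch once the current window has run out.
        if window_start is None or t - window_start >= window_s:
            if current:
                batches.append(current)
            current = []
            window_start = t
        current.append(payload)
    if current:
        batches.append(current)
    return batches


# Hypothetical example: three strikes within 10 ms, then two more about a second later.
signals = [(0.000, b"s1"), (0.003, b"s2"), (0.010, b"s3"), (1.200, b"s4"), (1.204, b"s5")]
batches = pool_signals(signals)
print(len(signals), "signals ->", len(batches), "packets")  # prints: 5 signals -> 2 packets
```

The cost of this design is latency: a signal can wait up to `window_s` in memory before it is sent.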

Posts: 335

Threads: 11

Joined: 2014-07-21

Mmm, I see the idea, but what if we have a heavy storm with many strikes... Stations nearby won't be affected since they go into saturation. But take the stations at a good distance for precise triangulation: imagine they all miss a few strikes because of this. In the end you would never have enough data for triangulation, since each station will miss a few peaks, but not necessarily the same ones...

my 2 cents

Posts: 244

Threads: 23

Joined: 2013-02-20

2016-01-24, 10:14

(This post was last modified: 2016-01-24, 10:20 by Steph.)

I didn't mean throwing away signals, but pooling them, so there is less IP/UDP overhead and the compression may be more efficient.
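Both effects can be put into rough numbers. This sketch assumes the minimum IPv4 header (20 bytes, no options) plus the 8-byte UDP header per datagram, ignores link-layer framing, and uses invented records with a shared layout as a stand-in for real signal data, so the sizes are illustrative only, not measurements from the network.

```python
import zlib

IPV4_HEADER_BYTES = 20  # minimum IPv4 header, no options (IPv6 would be 40)
UDP_HEADER_BYTES = 8


def header_overhead(n_packets):
    """IP + UDP header bytes for n_packets datagrams (link-layer framing not counted)."""
    return n_packets * (IPV4_HEADER_BYTES + UDP_HEADER_BYTES)


def compressed_sizes(payloads):
    """Compare zlib output: each payload compressed alone vs. all pooled together."""
    separate = sum(len(zlib.compress(p)) for p in payloads)
    pooled = len(zlib.compress(b"".join(payloads)))
    return separate, pooled


# Hypothetical records with a shared layout, standing in for real signal data.
payloads = [b"STRIKE;station=1234;amp=%03d;" % i + bytes(range(32)) for i in range(10)]
print("headers, 10 packets vs 1:", header_overhead(10), header_overhead(1))  # prints: headers, 10 packets vs 1: 280 28
print("zlib bytes, separate vs pooled:", compressed_sizes(payloads))
```

Pooling helps twice: fewer headers, and the compressor can reuse patterns across records instead of starting from scratch for each one.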

Posts: 2,178

Threads: 78

Joined: 2012-06-26

Pooling needs memory, and the controller has very little memory. Saving a few bytes by reducing the overhead is not worth the memory. That's why we send the signals out as quickly as possible, to leave space for the next ones. Otherwise, an overflow occurs, which you can see at the top of the status page.
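A toy model of that trade-off, assuming a buffer that holds a fixed number of signals. The class `SignalBuffer` and the capacity of 4 are hypothetical; the real controller's memory layout is not described here. Sending right away keeps the buffer nearly empty, while holding a burst back for pooling overflows it.

```python
from collections import deque


class SignalBuffer:
    """Hypothetical fixed-capacity FIFO: signals arriving while it is full are dropped and counted."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.queue = deque()
        self.overflows = 0

    def push(self, signal):
        if len(self.queue) >= self.capacity:
            self.overflows += 1  # no room left: this signal is lost
            return False
        self.queue.append(signal)
        return True

    def drain(self):
        """Hand everything buffered to the network and free the space."""
        sent = list(self.queue)
        self.queue.clear()
        return sent


# Send immediately: the buffer never holds more than one signal.
eager = SignalBuffer(capacity=4)
for i in range(10):
    eager.push(i)
    eager.drain()

# Pool a burst of 10 signals before sending: 6 of them do not fit.
pooled = SignalBuffer(capacity=4)
for i in range(10):
    pooled.push(i)
pooled.drain()

print(eager.overflows, pooled.overflows)  # prints: 0 6
```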

Posts: 3

Threads: 0

Joined: 2016-01-14

(2016-01-20, 02:22) kevinmcc Wrote:

(2016-01-17, 10:21) Bart Wrote:

(2016-01-16, 09:16) kevinmcc Wrote:

I was thinking QuickLZ, LZO, LZ4, LZ4-HC, or zlib for the compression. My first concern would be how much data needs to be compressed and how much CPU power the Blitzortung receivers have: would they be able to compress the data fast enough? The second concern is the power needed to decompress at the server end. I agree some data is not very compressible, while some is very easily compressible. I am still waiting for System Blue to launch so I can participate. Having not seen the data myself, I cannot judge. Compression may not even be a worthwhile option.

I see a significant issue with those choices. These compression algorithms only become efficient if you build up data in a buffer for a while. It would be quite efficient if you, let's say, built up data one hour at a time and then sent it all in bulk, but that would delay detection significantly, while I presume the target is to minimize latency. You really want stream compression algorithms for this sort of job. There are a few near-ideal candidates, but they are bad to use in practice for data you *must have*, because they completely lack redundancy. If you're willing to sacrifice a few detections, you could probably get away with it, though.
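The "stream compression without redundancy" point can be illustrated with Python's `zlib`. This sketch assumes one deflate stream per station, cut into packets with `Z_SYNC_FLUSH`, which flushes output without resetting the compression history. Later packets can then back-reference earlier ones and stay tiny, but a receiver that misses one packet cannot reliably decode the next. The records are contrived (a tiny one, then the same 200 bytes twice) so the effect is easy to see; they are not real signal data.

```python
import zlib


def stream_compress(payloads):
    """Compress payloads as one deflate stream, cutting one packet per payload.

    Z_SYNC_FLUSH emits all pending output but keeps the history, so later
    packets may refer back to data carried in earlier packets.
    """
    comp = zlib.compressobj()
    return [comp.compress(p) + comp.flush(zlib.Z_SYNC_FLUSH) for p in payloads]


def stream_decompress(packets):
    """Decode packets strictly in order with one shared decompressor."""
    decomp = zlib.decompressobj()
    return [decomp.decompress(pkt) for pkt in packets]


# Contrived records: the third repeats the second, so its packet is mostly a back-reference.
records = [b"x", bytes(range(200)), bytes(range(200))]
packets = stream_compress(records)
print("streamed packet sizes:", [len(p) for p in packets])
print("third record compressed alone:", len(zlib.compress(records[2])))

# Simulate losing the second packet: the receiver goes straight from packet 0 to packet 2.
receiver = zlib.decompressobj()
receiver.decompress(packets[0])
try:
    loss_detected = receiver.decompress(packets[2]) != records[2]
except zlib.error:
    loss_detected = True  # a back-reference points into data the receiver never got
print("later packet unusable after loss:", loss_detected)
```

Over UDP, where datagrams can be lost or reordered, a scheme like this would need periodic resets (e.g. `Z_FULL_FLUSH`) or retransmission, which gives back part of the gain.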

You are assuming that you need a large collection of data to build a good dictionary for compression. If you use a predefined dictionary, you do not need time to build a dictionary as is done with typical compression methods. I am going to assume the Blitzortung data is quite predictable and in a predefined format, so a good dictionary could be devised ahead of time. With a predefined dictionary, the data can be compressed and sent much more quickly while still being very efficient in both time and size.
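zlib supports exactly this through a preset dictionary (`zdict` in Python's `zlib.compressobj`/`decompressobj`). A minimal sketch, assuming an invented text record layout: the `PRESET` bytes and the field names are hypothetical, not the real Blitzortung format. Both ends must hold the identical dictionary, and a single small record is still compressed on its own, so the per-packet IP/UDP headers are unaffected.

```python
import zlib

# Hypothetical preset dictionary: fragments expected to recur in every record.
PRESET = b"station=;time=2016-01-20T;amplitude=;samples="


def compress_record(record, zdict=None):
    """Compress one record independently, optionally priming zlib with a preset dictionary."""
    comp = zlib.compressobj(zdict=zdict) if zdict else zlib.compressobj()
    return comp.compress(record) + comp.flush()


def decompress_record(blob, zdict=None):
    """Decompress one record; the receiver must supply the same dictionary."""
    decomp = zlib.decompressobj(zdict=zdict) if zdict else zlib.decompressobj()
    return decomp.decompress(blob) + decomp.flush()


# Hypothetical record in the invented layout.
record = b"station=1234;time=2016-01-20T02:22:00.123456;amplitude=0.87;samples=ABCD"
plain = compress_record(record)
primed = compress_record(record, PRESET)
print("raw:", len(record), "zlib:", len(plain), "zlib + preset dict:", len(primed))
assert decompress_record(primed, PRESET) == record
```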

I'd say that heavily depends on the station environment. In a perfect vacuum, far away from any other electromagnetic sources, this would hold true; the real world is very different, though. And yes, noise is a quantity you can model statistically, but the exact parameters of that model are quite location-dependent. So unless you manage to throw out most of the noise, this generally wouldn't work very well. Additionally, your main overhead would remain: the packet headers for networking. That's why stream compression has a chance of working, but only if it is applied correctly (accepting more signal delay and buffering data).